In a world where everyone’s trying to save the planet, it’s a bit shocking to discover that ChatGPT seems to be throwing a wild energy party. But why does this AI marvel guzzle so much juice? It’s not just because it loves to chat; it’s a complex dance of algorithms and data processing that requires some serious computing power.

Understanding Energy Consumption in AI

Energy consumption in artificial intelligence stems from the computational power needed to train and run models. Models like ChatGPT perform enormous numbers of arithmetic operations over massive amounts of data, and that computation is what drives their significant energy requirements.

Overview of AI Models

AI models such as ChatGPT are neural networks built from many layers, each holding learned parameters (weights) that capture patterns in the data. Training these models requires substantial electricity to power the servers and data centers involved. Energy use depends on several factors, including the model’s architecture and the size of its training dataset. As models grow larger and more complex, their energy consumption rises, which makes energy-efficient technologies essential for sustainable operation.
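To make the link between layers and parameter counts concrete, the sketch below counts the weights and biases in a stack of fully connected layers. The helper `dense_param_count` is hypothetical and for illustration only; the transformer models behind ChatGPT are built from attention and feed-forward blocks, so this shows how counts scale with width and depth rather than how any real model is sized.

```python
def dense_param_count(layer_widths):
    """Count weights and biases in a stack of fully connected layers.

    layer_widths lists the number of units per layer, input first,
    e.g. [784, 256, 10] (hypothetical helper for illustration).
    """
    total = 0
    for fan_in, fan_out in zip(layer_widths, layer_widths[1:]):
        total += fan_in * fan_out  # weight matrix connecting the two layers
        total += fan_out           # one bias per output unit
    return total


small = dense_param_count([512, 512, 512])  # 2 weight layers, width 512
deep = dense_param_count([512] * 9)         # 8 weight layers, width 512
wide = dense_param_count([2048] * 3)        # 2 weight layers, width 2048

print(small, deep, wide)  # 525312 2101248 8392704
```

Quadrupling the number of layers multiplies the count by four, while quadrupling the layer width multiplies it by roughly sixteen, because each weight matrix grows in both dimensions.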

Specifics of ChatGPT

ChatGPT exemplifies high energy consumption because of both its architecture and how it operates. Even after training is finished, the model uses significant computational resources to produce real-time responses: every user input passes through all the layers of the neural network, and the response is generated one token (a word or word fragment) at a time, with each new token requiring another pass through those layers. The more queries the service handles, the greater the total energy draw. Efficiency measures, such as optimized server usage, play a crucial role in managing this demand. Even so, the overall energy footprint remains considerable, reflecting the intensity of today’s artificial intelligence applications.
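A back-of-the-envelope estimate shows why every query costs energy. The sketch below assumes the common approximation that a forward pass through a transformer costs about 2 floating-point operations (FLOPs) per parameter for each token generated, and divides by an assumed hardware efficiency in FLOPs per joule. The function name, the 175-billion-parameter size, the 500-token response, and the efficiency figure are all illustrative assumptions, not measured values for ChatGPT.

```python
def inference_energy_joules(n_params, n_tokens, flops_per_joule):
    """Rough energy estimate for generating n_tokens with an n_params model.

    Assumes ~2 FLOPs per parameter per token (one forward pass each) and
    ignores attention over long contexts, memory traffic, idle power, and
    cooling overhead, so real figures will differ.
    """
    flops = 2 * n_params * n_tokens
    return flops / flops_per_joule


# Illustrative inputs only (hypothetical, not measurements of ChatGPT).
N_PARAMS = 175e9         # GPT-3-sized model
RESPONSE_TOKENS = 500    # length of one generated answer
FLOPS_PER_JOULE = 1e11   # assumed effective hardware efficiency

joules = inference_energy_joules(N_PARAMS, RESPONSE_TOKENS, FLOPS_PER_JOULE)
print(f"{joules:.0f} J, or about {joules / 3600:.2f} Wh per response")
```

Because the estimate scales with both parameters and tokens, halving the model size or the response length roughly halves it, and multiplying by the number of queries per day shows how quickly the total grows.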

Factors Contributing to Energy Use

ChatGPT’s energy consumption arises from multiple factors, primarily its model size and the operations of data centers.

Model Size and Complexity

Model size drives energy demand significantly. Larger models have more parameters, and every parameter takes part in the calculations performed for each token the model processes, during both training and inference. Running these calculations requires powerful hardware, so energy consumption tends to rise roughly in step with parameter count. Training adds to this: the model is updated over many iterations across vast datasets, and each iteration needs both a forward and a backward pass through the network, adding to the total energy footprint. For instance, GPT-3, the model family ChatGPT was developed from, has 175 billion parameters, and models at this scale require considerable resources, demonstrating the link between complexity and energy use in AI.
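A rule of thumb from the scaling-law literature puts training compute at roughly 6 FLOPs per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The sketch below applies it to GPT-3’s published size of 175 billion parameters and roughly 300 billion training tokens, and compares it with a hypothetical 1.3-billion-parameter model trained on the same data. The function name is illustrative, and the result is an approximation, not an accounting of any real training run.

```python
def training_flops(n_params, n_tokens):
    """Approximate training compute: ~6 FLOPs per parameter per token.

    Roughly 2 FLOPs go to the forward pass and 4 to the backward pass.
    """
    return 6 * n_params * n_tokens


TOKENS = 300e9  # approximate GPT-3 training set size in tokens

large = training_flops(175e9, TOKENS)  # GPT-3 scale
small = training_flops(1.3e9, TOKENS)  # hypothetical smaller model

print(f"large model: {large:.2e} FLOPs")  # large model: 3.15e+23 FLOPs
print(f"ratio: {large / small:.0f}x")     # ratio: 135x
```

The ratio equals the ratio of parameter counts, which is the point: at a fixed dataset size, training compute, and with it energy, grows in direct proportion to model size.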

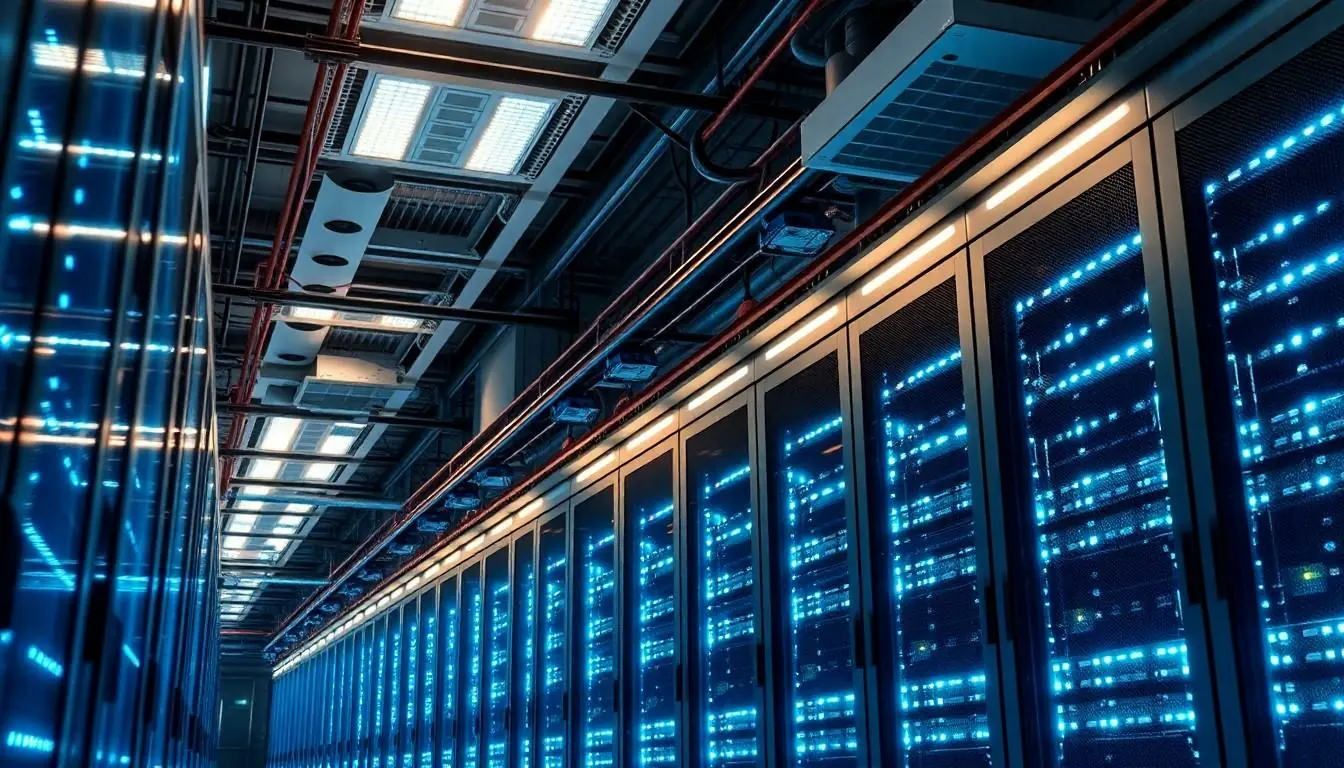

Data Center Operations

Data center operations contribute heavily to overall energy consumption. High-performance servers run continuously to handle the demands of AI models like ChatGPT, and cooling systems draw additional power to keep that hardware within safe operating temperatures. Efficiency varies widely among data centers, depending on factors such as geographical location (a cooler climate reduces the cooling load), power sources, and the technology in use. More sustainable server management practices can reduce energy demand, and powering these centers with renewable energy is vital for reducing their environmental impact. As operations scale up, energy usage in data centers remains a crucial consideration.
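Data center efficiency is commonly summarized as power usage effectiveness (PUE): total facility energy divided by the energy used by the IT equipment alone, so a PUE of 1.0 would mean zero overhead for cooling and power distribution. The sketch below, using a hypothetical `facility_energy` helper and made-up load figures, shows how the same computing workload costs different amounts of total energy in facilities with different PUE values.

```python
def facility_energy(it_energy_kwh, pue):
    """Total facility energy implied by an IT load and a PUE value.

    PUE = total facility energy / IT equipment energy, so the overhead
    (cooling, power conversion, lighting) is it_energy_kwh * (pue - 1).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


IT_LOAD_KWH = 1_000_000  # hypothetical annual server energy for an AI workload

for pue in (1.1, 1.6):  # illustrative efficient vs. less efficient facilities
    total = facility_energy(IT_LOAD_KWH, pue)
    overhead = total - IT_LOAD_KWH
    print(f"PUE {pue}: {total:,.0f} kWh total, {overhead:,.0f} kWh overhead")
```

With these assumed numbers, the less efficient facility spends six times as much energy on overhead for exactly the same computation.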

Comparison with Other AI Models

Different AI models exhibit varying energy consumption levels. While ChatGPT demands considerable power due to its complexity, some alternative models focus on improving energy efficiency.

Energy Efficiency of Alternative Models

Alternative AI models often incorporate optimizations that reduce their overall energy consumption. Models with fewer parameters, for example, need less computation for every token they process. Techniques like knowledge distillation and pruning shrink large models while giving up little accuracy, and on many tasks smaller models achieve results comparable to larger ones for a fraction of the electricity. Additionally, some companies build hardware designed specifically for AI workloads, which performs more computation per unit of energy and further lowers the carbon footprint.
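Knowledge distillation is usually implemented by training a small student model to match both the true labels and the temperature-softened output distribution of a large teacher model. The sketch below follows that common formulation in plain Python; the function names, the temperature, and the weighting `alpha` are illustrative choices, and a real training loop would compute this loss on batches inside a deep learning framework and backpropagate through it.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target loss and a hard-label loss."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions, scaled by T^2
    # so its gradient magnitude stays comparable to the hard-label term.
    soft = sum(pt * math.log(pt / ps)
               for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft *= temperature ** 2
    # Ordinary cross-entropy against the true label at temperature 1.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher, true_label=0))
print(distillation_loss(far_student, teacher, true_label=0))
```

The student whose outputs resemble the teacher’s gets the lower loss. Softening with a temperature matters because the teacher’s small probabilities on wrong classes carry information about which mistakes are reasonable, and the student learns from that as well as from the correct label.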

Case Studies in Energy Consumption

Case studies illustrate how widely energy consumption varies among AI models. Google’s BERT, with 110 million to 340 million parameters, needs far fewer resources than the models behind ChatGPT and therefore has a lower environmental impact during processing. At the other end of the scale, one widely cited estimate puts the energy used to train GPT-3 at about 1,287 megawatt-hours, with emissions of roughly 550 tonnes of CO2-equivalent. These comparisons highlight the pressing need for AI solutions that balance performance with energy efficiency in support of sustainable practices in technology.
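The carbon footprint of a training run depends not only on how much energy it uses but also on the carbon intensity of the electricity supplying it. The sketch below multiplies energy by an assumed grid intensity; the 1 GWh workload and the three intensity values are hypothetical round numbers chosen to show the spread, not measurements for any real model or grid.

```python
def emissions_tonnes(energy_kwh, kg_co2e_per_kwh):
    """Operational emissions in tonnes of CO2-equivalent.

    Emissions = energy used x carbon intensity of the electricity;
    dividing by 1,000 converts kilograms to tonnes.
    """
    return energy_kwh * kg_co2e_per_kwh / 1000


TRAINING_KWH = 1_000_000  # hypothetical 1 GWh training run

# Illustrative grid intensities in kg CO2e per kWh (assumed values).
grids = {"coal-heavy": 0.8, "mixed": 0.4, "mostly renewable": 0.05}

for name, intensity in grids.items():
    print(f"{name}: {emissions_tonnes(TRAINING_KWH, intensity):.0f} t CO2e")
```

Under these assumptions, the same workload differs by more than an order of magnitude in emissions across grids, which is why the power source of a data center matters as much as the efficiency of the computation itself.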

Mitigating Energy Usage

Mitigating energy consumption in AI models like ChatGPT involves various strategies aimed at enhancing efficiency.

Techniques for Optimization

Optimization techniques include knowledge distillation, which trains a smaller, more efficient student model to reproduce the outputs of a larger teacher model. Pruning removes parameters that contribute little to the model’s output, reducing computational requirements while largely maintaining performance. Quantization stores weights and activations at lower numerical precision, for example 8-bit integers instead of 32-bit floating-point numbers, which cuts memory traffic and the energy spent on arithmetic. Together, these methods let AI models deliver similar results with significantly less energy.
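The sketch below illustrates pruning and quantization on a toy list of weights: magnitude pruning, which zeroes the weights with the smallest absolute values, and symmetric 8-bit quantization, which maps each weight to an integer in [-127, 127] plus one shared scale factor. The helper names and weights are hypothetical; production frameworks apply these ideas to whole tensors, and the energy savings only materialize when the hardware and software kernels can exploit sparse or low-precision data.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k smallest-magnitude weights.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate floating-point weights."""
    return [v * scale for v in q]


w = [0.82, -0.03, 0.41, -0.77, 0.005, 0.26, -0.12, 0.9]
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

print(pruned)  # [0.82, 0.0, 0.41, -0.77, 0.0, 0.0, 0.0, 0.9]
print(q, scale)
```

Half of the weights are now zero, and each remaining value fits in a single byte instead of four for a 32-bit float, at the cost of a rounding error no larger than half the scale factor.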

Future Trends in AI Efficiency

Future trends in AI efficiency focus on energy-aware architectures. Researchers are working on models that need less computational power while becoming more capable. Hardware innovations, such as custom AI chips and more efficient cooling systems, promise to reduce energy usage further. Adopting renewable energy sources in data centers stands out as a pivotal way to shrink the carbon footprint of AI operations.

ChatGPT’s energy consumption highlights a critical challenge in the advancement of artificial intelligence. As AI models become more complex, the demand for computational power and energy increases significantly. This trend emphasizes the importance of developing strategies that promote energy efficiency without sacrificing performance.

Innovative approaches such as knowledge distillation and the use of renewable energy sources in data centers can play a vital role in reducing the environmental impact of AI technologies. Moving forward, the focus must be on creating sustainable AI solutions that balance operational demands with ecological responsibility. By prioritizing energy efficiency, the tech industry can contribute to a greener future while continuing to push the boundaries of AI capabilities.